Raspberry Pi and edge AI development for embedded systems

Bluefruit Software has been delving into edge AI over the past 12 months.

Our Audio Classification Equipment (ACE) research and development project, which has received AeroSpace Cornwall funding, has seen us investigate adding edge AI to embedded systems.

In this post, we’re going to look at how and why we used a Raspberry Pi for proof of concept work on ACE, and offer some advice on using Raspberry Pi boards for proofs of concept and prototyping.

What’s edge AI?

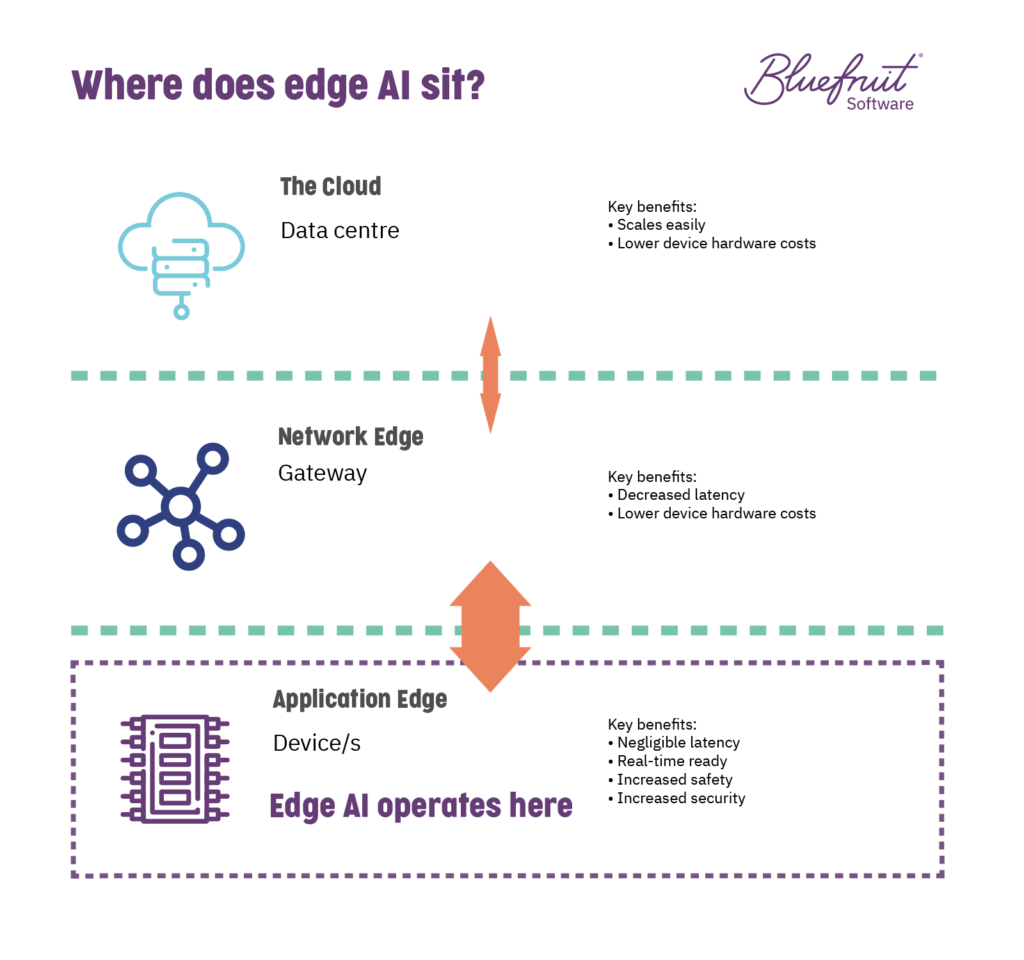

Here at Bluefruit, we see this flavour of artificial intelligence (AI) as AI that runs on an embedded device without being connected to a network or the internet. The AI sits on the device itself and can function without external network support. This is unlike the kind of AI found in a device such as an Amazon Echo Dot, which needs constant internet access to function.

An edge AI-enabled embedded system can take available data, give feedback, and make intelligent decisions without human operators.
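
To make the idea concrete, here is a minimal Python sketch of what "the AI is on the device" means for audio. A frame of samples is turned into two simple features and scored by a tiny linear model, with no network call anywhere. The labels, the weights and the two features (RMS energy and zero-crossing rate) are hypothetical placeholders chosen for illustration. ACE itself used a trained neural network, not this model.

```python
import math

# Hypothetical labels and weights, made up for illustration. A real model
# would be trained offline and only its parameters shipped to the device.
LABELS = ("quiet", "noisy")
WEIGHTS = ((-8.0, -0.5), (8.0, 0.5))  # one (energy, zcr) weight pair per label
BIASES = (1.0, -1.0)


def extract_features(frame):
    """Return (RMS energy, zero-crossing rate) for a frame of at least 2 samples in [-1, 1]."""
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    # Count adjacent sample pairs whose signs differ.
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (len(frame) - 1)
    return rms, zcr


def classify(frame):
    """Score each label with a linear model and return the highest-scoring label.

    Everything runs locally: no network access is needed at any point.
    """
    features = extract_features(frame)
    scores = [
        sum(w * f for w, f in zip(weights, features)) + bias
        for weights, bias in zip(WEIGHTS, BIASES)
    ]
    best = max(range(len(LABELS)), key=lambda i: scores[i])
    return LABELS[best]


print(classify([0.0] * 256))        # → quiet
print(classify([0.9, -0.9] * 128))  # → noisy
```

On real hardware the frames would come from a microphone buffer rather than a literal list, but the shape of the loop is the same: capture, extract features, infer, act.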

Why even bother with edge AI?

AI offers many potential business benefits, from reducing human error to automating laborious or dangerous processes and augmenting existing human actions. For embedded systems, edge AI means that systems which often run with limited computing resources, or which face connectivity or security challenges, can still have AI. Such systems include medical devices and Industry 4.0 industrial systems.

(Want to know more about what edge AI could do for your product? Check out this post on key considerations and factors for edge AI in embedded software and hardware development.)

Bringing edge AI to a Raspberry Pi for ACE: benefits and challenges

ACE began as an R&D project to see if we could make AI work on an embedded system with limited computing power and connectivity.

In terms of software, the ACE project team used a combination of fastai, TensorFlow and a few other tools to build and train the neural network.

The benefits of using a Raspberry Pi

A whole series of reasons made the Raspberry Pi appealing, including, but not limited to:

- Ubiquity. Since 2012, Raspberry Pi has become the WordPress of computing hardware. The main limit on what a Raspberry Pi board can do is (pretty much) what you can code for it, so its versatility is nearly endless. The support available for the Pi range is immense, with a huge number of public software libraries and hardware add-ons. That meant our team could get started fast and begin iterating quickly.

- Experience. We’d used Raspberry Pi devices before in proof of concept work for several clients. So several of our teams already had experience using them for this kind of work, where we wanted to find out whether something was possible.

- Substitution. In the early stages of the ACE project, we just wanted to see whether we could get AI working in an embedded environment without worrying too much about the hardware. A Pi also has many hardware inputs and outputs available out of the box, so as a single-board computer it straddles the line between a classic embedded platform and a more powerful desktop computer. It is also noticeably underpowered compared to a full-fledged desktop computer or some AI-specific GPU platforms for robotics. For ACE, it acted as a reasonable stand-in at this stage, before we moved on to prototyping on a microcontroller such as an STM32.

Also, as this was a project we wanted to demo and show off, the easy availability of touchscreens for the Pi was a deciding factor.

All of this made the Raspberry Pi a good fit for what we were trying to do, though it still presented some challenges.

The challenges of making an edge AI proof of concept using a Raspberry Pi

We had to wrestle with several things while developing for the Pi and preparing to move from proof of concept to prototype. Challenges included:

- Working in Linux. Developing on a Raspberry Pi, you’re working in a Linux (potentially embedded Linux) development environment. Not everyone on the team had embedded Linux experience, so we needed to help bring them up to speed on working within Linux.

- Python is the de facto development language of AI. Raspberry Pi and Python go hand in hand, and many existing AI libraries use Python, but Python is not a systems language. Thankfully, our team has Python experience, so we got up and running quickly. To take things further, though, we needed to carry what we had learned over to C and C++, which suit a real embedded environment.

- Ensuring everyone was working in the same development environment. Generating the firmware images for the SD cards in our fleet of Raspberry Pis was tricky. Everyone, from developers to testers, needed an identical, repeatable image with the same libraries, configuration and so on. Thankfully, we learned how to use pi-gen to build identical, repeatable firmware for all the Raspberry Pi devices we used.

- Having enough training data and somewhere to train our AI effectively. In many AI projects, the availability of training data and of a powerful computing system to train on can hold up progress. If you don’t already have a good amount of computing resource available, training the AI model can take much longer, making it difficult to move quickly through a project. The amount of training data also affects the accuracy of the model. By using the supercomputing facilities at Goonhilly Earth Station’s AI Institute, we had somewhere that enabled speedy training. Plus, with tweaks to the neural net we used, we brought the amount of training data we needed down to a manageable volume.

- Documenting our AI. A significant aim for ACE was to see whether we could also create edge AI that meets specific business sectors’ compliance demands, such as medical device software needing to meet IEC 62304. This continues to be a challenge for our ACE team. Still, it’s an area where we have made good progress, especially by using living documentation, which is well supported by the Lean-Agile software development practices our teams already use.
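
Living documentation can take many forms. One lightweight version, sketched below in Python, writes each requirement as the docstring of an automated test. The specification is then checked on every run, and a readable report is generated from the same source. The requirement IDs, the confidence threshold and the `decide` function are hypothetical, and nothing here is claimed to satisfy IEC 62304 on its own.

```python
# Hypothetical threshold and requirement IDs, for illustration only.
CONFIDENCE_THRESHOLD = 0.7


def decide(scores):
    """Return the top-scoring label, or "unknown" if the model is not confident enough."""
    label, score = max(scores.items(), key=lambda item: item[1])
    return label if score >= CONFIDENCE_THRESHOLD else "unknown"


def test_confident_prediction_is_reported():
    """REQ-001: A prediction at or above the confidence threshold is reported as-is."""
    assert decide({"alarm": 0.9, "speech": 0.1}) == "alarm"


def test_low_confidence_is_reported_as_unknown():
    """REQ-002: A prediction below the confidence threshold is reported as "unknown"."""
    assert decide({"alarm": 0.55, "speech": 0.45}) == "unknown"


def living_report(tests):
    """Run each test and pair its requirement text (docstring) with PASS or FAIL."""
    lines = []
    for test in tests:
        try:
            test()
            status = "PASS"
        except AssertionError:
            status = "FAIL"
        lines.append(f"{status}  {test.__doc__.strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(living_report([
        test_confident_prediction_is_reported,
        test_low_confidence_is_reported_as_unknown,
    ]))
```

Because the requirement text and the check live in one place, the report cannot drift out of date without a test failing.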

If we started the project again from scratch, we would happily begin on a Pi again.
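
If we did start again, one small addition worth considering alongside pi-gen is a check that everyone is flashing the very same build. Here is a minimal sketch. It assumes the built image file is shared together with a published SHA-256 hash. The file names and this workflow are hypothetical, not part of our ACE process as described above.

```python
import hashlib


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in 1 MiB chunks so multi-gigabyte SD card images never load fully into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as image:
        # iter() with a sentinel keeps reading until read() returns b"" (end of file).
        for chunk in iter(lambda: image.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_image(path, expected_hex):
    """Return True if the image file matches the reference hash (case-insensitive)."""
    return sha256_of(path) == expected_hex.strip().lower()


# Hypothetical usage (file name and hash source are placeholders):
# if not verify_image("ace-pi.img", open("ace-pi.img.sha256").read().split()[0]):
#     raise SystemExit("Image does not match the reference build; do not flash.")
```

The check belongs on the image file before flashing. Once a Pi has booted from the SD card, the system writes to it, so the card's contents will no longer match the original hash.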

Where is Bluefruit Software going now with edge AI?

Our work on edge AI is far from over. We’re now in the prototype phase and have shifted our focus to STM32-based platforms, using the SensorTile.box devkit. Overall, we feel confident working with ARM architecture for edge AI on embedded systems.
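
Moving from a Python proof of concept on the Pi to C or C++ on an STM32 means the trained model has to end up in a form the firmware can compile in. One common approach is to quantize the weights to 8-bit integers and emit them as a C header. The sketch below is written under our own assumptions and does not describe the ACE pipeline. Toolchains such as TensorFlow Lite for Microcontrollers normally handle this step for you. The function names and the symmetric per-tensor scheme here are illustrative only.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization, so that w ~= q * scale with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # An all-zero tensor would give scale 0; fall back to 1.0 to avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() picks the nearest integer; the clamp guards against float error at the extremes.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def to_c_header(name, weights):
    """Render the quantized weights as C source that firmware code can #include."""
    q, scale = quantize_int8(weights)
    values = ", ".join(str(v) for v in q)
    return (
        "#include <stdint.h>\n"
        f"/* Generated file: real value = {name}[i] * {name}_scale */\n"
        f"static const float {name}_scale = {scale!r}f;\n"
        f"static const int8_t {name}[{len(q)}] = {{{values}}};\n"
    )


# Hypothetical usage: the array name and weight values are made up.
print(to_c_header("layer1_weights", [0.12, -0.8, 0.33, 0.0]))
```

Symmetric quantization keeps zero exactly representable and needs only one scale per tensor, which keeps the C side simple. Real deployments often use per-channel scales or zero points for better accuracy.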

We’re also discussing with several clients how edge AI could enhance new and existing products.

Your team for AI in embedded systems

Are you looking for embedded software engineers and testers? Over the past 20 years, Bluefruit Software has delivered quality software to various sectors, including industrial, medical devices and scientific instruments.
