Is Rust ready for embedded software?

Rust is a systems language that started life at Mozilla Research, first appearing in 2010. Today it’s an open-source project that Mozilla sponsors. Rust has a growing community of advocates, from hobbyists to professional software engineers. Supporters claim that it can be used across a range of programming scenarios, including embedded software. But is Rust ready for embedded?

Why are we talking about Rust?

Rust has been building up momentum. More and more organisations, including Microsoft, are looking to make use of it, and several in the embedded space are already using it.

It may be easy to think that the interest in Rust is simply because it’s new. That would be a plausible view in a field where the two core languages are over 30 years old, with one nearing 50 (C is 47 and C++ is 34 at the time of writing).

But it’s more than that. As E. Dunham (Operations Engineer, CloudOps, Mozilla) said of Rust in her talk “Rust for IoT” at linux.conf.au 2019:

“Rust attacks the apparent dichotomies between human-readable code, versus fast performing code. Ergonomic to develop code, versus verifiable, certified bug-free code…

“To write code free of certain classes of bug, in any language, you need to follow a bunch of rules. And what sets Rust apart is that these rules live explicitly in the language spec and in the compiler.” – E. Dunham, Operations Engineer, CloudOps, Mozilla

What does this come down to? Rust takes the good parts of C and C++, along with programming practices that lead to good-quality code, and bakes them in from the outset.

Or, at least Safe Rust does.

Safe Rust and Unsafe Rust

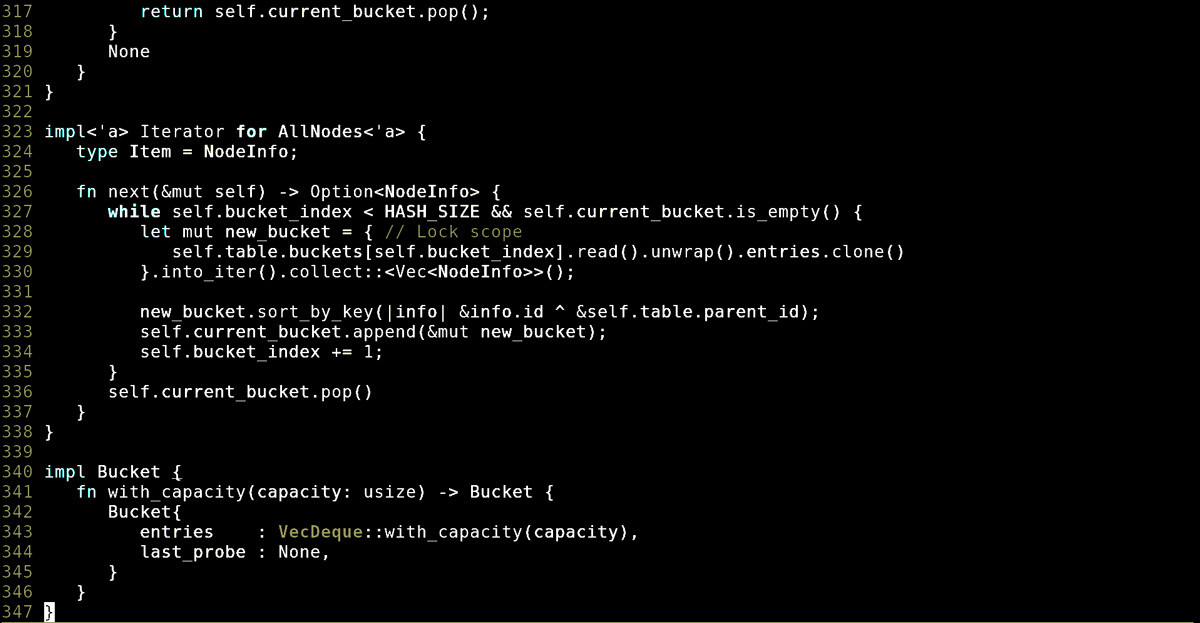

As James Mickens eloquently put it, systems programmers live in a world without law: pointer wrangling and manual memory management are the ugly realities of low-level code. In embedded development, timing, space and memory constraints are often so tight that automatic memory management, such as garbage collection, is unaffordable. When you’re building the rules, you need the tools to break them. How does Rust approach this need for fine-grained control over system resources?

The answer is Unsafe Rust, an extension of Safe Rust that allows programmers to bypass certain (but not all) static safety guarantees. They do this inside blocks marked with the “unsafe” keyword; the borrow checker and type checker still apply inside them. Another apt name could be “privileged” Rust, as it enables a handful of extra operations (the Rust book lists five, including dereferencing raw pointers and calling unsafe functions) that together make any low-level programming task possible. Constraining them to marked blocks helps with visibility and with enforcing design contracts.
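As a minimal sketch of what an unsafe block looks like in firmware-style code, the hypothetical `set_bits` function below performs a volatile read-modify-write through a raw pointer, the way a driver might update a memory-mapped register. On real hardware the pointer would come from an address in the datasheet or from a peripheral access crate; here a local variable stands in for the register so the sketch runs on a desktop.

```rust
use std::ptr;

/// Hypothetical helper: sets the bits in `mask` on the register behind `reg`
/// using a volatile read-modify-write.
///
/// # Safety
/// `reg` must be non-null, properly aligned, and valid for reads and writes
/// of a `u32` for the duration of the call.
pub unsafe fn set_bits(reg: *mut u32, mask: u32) {
    // SAFETY: the caller upholds the pointer-validity contract documented above.
    unsafe {
        // Volatile accesses are never removed or merged by the optimiser,
        // which matters when the "memory" is really a hardware register.
        let current = ptr::read_volatile(reg);
        ptr::write_volatile(reg, current | mask);
    }
}
```

Creating the raw pointer is safe; only dereferencing it is not. A caller therefore writes something like `unsafe { set_bits(&mut fake_register as *mut u32, 0b0100) }`, so every place that relies on the pointer contract stays visible in the source.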

In properly designed Rust, unsafe blocks are kept small and infrequent. Since they are the only place where memory corruption and Undefined Behaviour can originate, developers know exactly where to start looking when something goes wrong.
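A common way to keep unsafe code small is to wrap it in a safe function whose ordinary checks guarantee the unsafe block’s preconditions. The sketch below mirrors the split_at_mut example from the Rust book; the standard library already provides `slice::split_at_mut`, so the name `split_halves` is purely illustrative. The borrow checker cannot prove that two mutable views of one slice are disjoint, so a short unsafe block builds them, guarded by a bounds check.

```rust
use std::slice;

/// Splits a mutable slice into two non-overlapping mutable halves at `mid`.
/// The public signature is safe; the unsafe part is confined to a few lines.
pub fn split_halves(values: &mut [u32], mid: usize) -> (&mut [u32], &mut [u32]) {
    let len = values.len();
    // Checked in safe code so the unsafe block below can rely on it.
    assert!(mid <= len, "mid out of bounds");
    let ptr = values.as_mut_ptr();
    // SAFETY: `mid <= len`, so both ranges lie inside the original slice and
    // do not overlap; both results borrow from `values`, so neither can
    // outlive it.
    unsafe {
        (
            slice::from_raw_parts_mut(ptr, mid),
            slice::from_raw_parts_mut(ptr.add(mid), len - mid),
        )
    }
}
```

If the function ever misbehaves, the search for the cause starts at the one commented unsafe block rather than at every caller.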

What bugs does Rust avoid?

There are three main types of bugs that Rust avoids:

- Memory bugs

- Concurrency bugs—specifically “data races”

- Consequences of Undefined Behaviour

Memory bugs

Unlike many other languages that promise memory safety, Rust has no garbage collector or other runtime memory manager. Control over memory stays in the hands of the developer, so Rust’s performance is comparable to C and C++, even in low-level, constrained environments. Rust’s approach to memory safety is built largely on static guarantees. Its compiler, specifically a component called the “borrow checker”, enforces memory and resource ownership, so most misuse of memory in Safe Rust is caught at compile time, and the remainder (such as out-of-bounds indexing) is stopped by runtime checks rather than silently corrupting memory. Resource ownership has been part of C and C++ programmers’ lexicon for decades as a good practice, but Rust formalizes it as part of the language semantics.
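The ownership rules the borrow checker enforces can be seen in a few lines. In this minimal sketch (all function names are hypothetical), passing a `Vec` by value moves ownership, passing a reference only lends it, and the heap buffer is freed deterministically when its owner goes out of scope.

```rust
/// Takes ownership of `frame`: the Vec is freed when this function returns.
fn checksum_owned(frame: Vec<u8>) -> u32 {
    frame.iter().map(|&b| u32::from(b)).sum()
} // `frame` is dropped here; no garbage collector is involved

/// Only borrows the bytes: the caller keeps ownership.
fn checksum_borrowed(frame: &[u8]) -> u32 {
    frame.iter().map(|&b| u32::from(b)).sum()
}

fn ownership_demo() -> (u32, u32) {
    let frame: Vec<u8> = vec![1, 2, 3];
    // Lending the data leaves `frame` usable afterwards.
    let borrowed = checksum_borrowed(&frame);
    // Passing by value moves ownership into `checksum_owned`.
    let owned = checksum_owned(frame);
    // Uncommenting the next line is a compile-time error (E0382, use of a
    // moved value), because `frame` no longer owns the buffer:
    // println!("{:?}", frame);
    (borrowed, owned)
}
```

The use-after-move on the commented line is exactly the kind of bug that, in C, would compile and then read freed memory at runtime.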

Safety is not the only benefit of Rust’s fail-fast design. Microsoft has reported that around 70% of the vulnerabilities it fixes through security updates are memory safety issues, so Safe Rust can significantly reduce the attack surface of any networked program. Older embedded solutions have been less exposed to malicious actors, but with the rapid growth of Internet of Things (IoT) devices, security can’t be an afterthought; it must be baked in from the start, and Rust helps enforce that.

Concurrency bugs

Multithreaded programming is hard. There is no single solution to concurrency bugs, and certain classes of them cannot be eliminated at the language level. Deadlocks, as in the classic dining philosophers problem, are one example, and Safe Rust does not prevent them either.

But there is one category of concurrency bug that is absent in Safe Rust: data races. A data race occurs when multiple threads access a shared resource concurrently without synchronization, and at least one of them is modifying it. This often leads to subtle, difficult-to-diagnose problems, since the consumers of the shared resource can observe it in a corrupt state. In desktop computing, the contended resource is usually memory, but in embedded programming the field broadens: peripherals, sensors, actuators and all manner of other hardware resources can be involved, and can fail in mysterious and creative ways.

The same compiler-enforced ownership mechanism that prevents memory corruption is also used to prevent unsynchronized access to a shared resource.
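As a minimal sketch, the example below models a peripheral with a hypothetical `FakeUart` type that simply records the bytes sent to it. Safe Rust will not let several threads hold a mutable reference to it at once; wrapping it in `std::sync::Mutex`, shared via `Arc`, is one way to satisfy the compiler, and the lock guarantees that each message is written without interleaving. On bare metal a critical section or an RTOS primitive would play the lock’s role; `std` threads are used here only so the sketch runs on a desktop.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical stand-in for a UART peripheral: records every byte written.
#[derive(Default)]
pub struct FakeUart {
    pub sent: Vec<u8>,
}

impl FakeUart {
    pub fn write(&mut self, bytes: &[u8]) {
        self.sent.extend_from_slice(bytes);
    }
}

/// Spawns `workers` threads that each send `msg` through one shared UART,
/// then returns everything the UART received.
pub fn send_from_threads(workers: usize, msg: &'static [u8]) -> Vec<u8> {
    // Arc gives each thread shared ownership; Mutex ensures only one thread at
    // a time can obtain `&mut FakeUart`. Moving a plain `&mut FakeUart` into
    // several threads instead would be rejected at compile time.
    let uart = Arc::new(Mutex::new(FakeUart::default()));

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let uart = Arc::clone(&uart);
            thread::spawn(move || {
                // The lock is held for the whole write, so messages from
                // different threads are never interleaved.
                uart.lock().unwrap().write(msg);
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }

    // Bind to a local so the lock guard is released before `uart` is dropped.
    let sent = uart.lock().unwrap().sent.clone();
    sent
}
```

Removing the `Mutex` and trying to mutate the UART from several threads does not produce a flaky bug; it produces a compile error.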

Consequences of Undefined Behaviour

Undefined Behaviour (UB) is an often misunderstood quirk of language specifications, particularly those of compiled languages like C and C++. The standards for these languages declare the result of certain incorrect operations, such as signed integer overflow or reading past the end of an array, to be “undefined”. Since these operations are not expected to happen in a correct program, the compiler is free to do anything, from carrying on silently to crashing, and optimisers routinely assume they never occur. This leads to a particularly nefarious family of bugs, as they may appear or disappear depending on the specific compiler, optimisation level and architecture. UB is difficult to detect because sometimes it “just works”, which is why many C and C++ codebases contain instances of it that nobody has noticed.

Safe Rust is designed so that Undefined Behaviour cannot occur: any operation that compiles has well-defined results and side effects, which may include a controlled panic. UB can still be triggered inside Unsafe Rust blocks, but the conditions that cause it are far fewer than in C and C++, and they are confined to highly visible, critical code blocks.
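Integer overflow illustrates the difference. In C, overflowing a signed integer is undefined behaviour; in Rust, a plain `+` that overflows is defined to panic in debug builds and to wrap in release builds (with the default compiler settings), and the standard library offers explicit alternatives. The minimal sketch below (the function name is hypothetical) shows three of them side by side.

```rust
/// Returns the result of `a + b` under three explicit overflow policies.
pub fn overflow_policies(a: i32, b: i32) -> (Option<i32>, i32, i32) {
    (
        a.checked_add(b),    // None if the sum does not fit in an i32
        a.wrapping_add(b),   // two's-complement wrap-around
        a.saturating_add(b), // clamps to i32::MIN or i32::MAX
    )
}
```

For example, `overflow_policies(i32::MAX, 1)` gives `(None, i32::MIN, i32::MAX)`. Each method has one documented outcome for every input, so the result cannot change with the compiler or optimisation level.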

Disadvantages of Rust

Target support

The Rust compiler is built on the LLVM toolchain, much like the newer, constantly improving Clang C/C++ compiler. This means Rust can only target architectures that LLVM supports. Thanks to decades of support and countless vendor-supplied toolchains, C++ and particularly C are available almost everywhere.

Here at Bluefruit Software, a great number of projects target the ARM architecture, which LLVM, and therefore Rust, supports; but some of the more exotic microcontrollers we work with will have to wait.

Compile time

Rust is notorious for its long compilation times: builds are significantly slower than for C and slower, though by a smaller margin, than for C++. Coupled with the fact that Rust’s strictness makes developers recompile more often, this can reduce developer productivity.

The Rust team is working hard to reduce compilation times, but a fundamental aspect of this slowness is unavoidable: the Rust compiler is doing more work. It’s the price paid for the static safety and correctness checks, and whether the time lost is worth the debugging time saved is a call for the developer to make.

It is worth noting that compilation time only matters during development; the resulting executable code runs on the target processor as fast as its C or C++ equivalent.

Learning and training

While Rust’s syntax may appear close to C/C++ on the surface, the semantics are different. This makes it a difficult language to tackle, particularly as it forces experienced systems programmers to unlearn habits that the compiler considers harmful.

The quality of compile-time error messages in Rust is outstanding, which alleviates this problem, but everyone must fight the compiler sooner or later. You must consider the cost of bringing developers up to speed when assessing Rust as the language of choice for a project.

Developing Ecosystem

This is a general problem that ails any up-and-coming language. Thousands of C and C++ libraries exist, spanning almost every problem domain, and Rust’s ecosystem isn’t there yet. In choosing Rust for a commercial project, we need to ensure that proven libraries are available for the task at hand.

At Bluefruit Software, we develop our Real-Time Operating System (RTOS) and drivers in-house. Many of the modules we usually delegate to external libraries (such as a file system or a TCP/IP stack) have bare-metal Rust alternatives at various stages of development, as well as proven higher-level options (such as those running on embedded Linux).

What about real-time and Rust?

Rust offers fine-grained control over memory and performance, with no garbage collector to introduce unpredictable pauses. It can be compiled for bare-metal systems by opting out of the standard library (with the no_std attribute) and relying on the smaller core library instead. This means it is well suited to real-time applications. By real-time we mean the strict or “hard” interpretation of the term, where tasks must be guaranteed to complete by a deterministic deadline.
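Much of what no_std code looks like is ordinary Rust that avoids heap allocation. As a minimal sketch, the hypothetical `RingBuffer` below is a fixed-capacity queue that uses only `core` features, so it could live in a `#![no_std]` crate (the attribute is left out here so the example runs on a desktop). Its storage is reserved up front and a full buffer returns an error instead of growing, so every operation takes constant time.

```rust
/// Fixed-capacity FIFO queue backed by an array: no heap allocation.
pub struct RingBuffer<T: Copy + Default, const N: usize> {
    buf: [T; N],
    head: usize, // index of the oldest element
    len: usize,  // number of stored elements
}

impl<T: Copy + Default, const N: usize> RingBuffer<T, N> {
    pub fn new() -> Self {
        Self { buf: [T::default(); N], head: 0, len: 0 }
    }

    /// Appends `value`, or hands it back as `Err` if the buffer is full.
    pub fn push(&mut self, value: T) -> Result<(), T> {
        if self.len == N {
            return Err(value);
        }
        let tail = (self.head + self.len) % N;
        self.buf[tail] = value;
        self.len += 1;
        Ok(())
    }

    /// Removes and returns the oldest element, if any.
    pub fn pop(&mut self) -> Option<T> {
        if self.len == 0 {
            return None;
        }
        let value = self.buf[self.head];
        self.head = (self.head + 1) % N;
        self.len -= 1;
        Some(value)
    }

    pub fn len(&self) -> usize {
        self.len
    }

    pub fn is_empty(&self) -> bool {
        self.len == 0
    }
}
```

Returning the rejected value on overflow, rather than allocating or silently dropping it, leaves the policy for a full buffer to the caller, which is usually where a real-time design wants that decision made.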

What about debugging and TDD with Rust?

Debugger support is good. Debuggers work on compiled binary code and map it back to source files through debug symbols, which the Rust compiler generates just as C and C++ compilers do. GDB (the GNU Debugger) and LLDB both support Rust, as do the tools built on top of them.

When it comes to testing, Rust is somewhat opinionated: a test framework is built into the language and its tooling, and tests are run with cargo test. Testing is a core topic in the Rust book, which devotes a chapter to writing automated tests and walks through a Test-Driven Development (TDD) workflow.
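A minimal sketch of the built-in test framework: functions marked `#[test]` inside a `#[cfg(test)]` module are compiled and run only by `cargo test`, so they add nothing to the firmware image. The `clamp_duty_cycle` function is hypothetical.

```rust
/// Hypothetical helper: clamps a requested PWM duty cycle to 0..=100 percent.
pub fn clamp_duty_cycle(percent: i32) -> u8 {
    // After clamping, the value always fits in a u8.
    percent.clamp(0, 100) as u8
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn values_in_range_pass_through() {
        assert_eq!(clamp_duty_cycle(42), 42);
    }

    #[test]
    fn out_of_range_values_are_clamped() {
        assert_eq!(clamp_duty_cycle(-5), 0);
        assert_eq!(clamp_duty_cycle(250), 100);
    }
}
```

In a TDD workflow the test module is written first and fails until the function is implemented.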

To help keep documentation alive and up to date, Rust encourages embedding tests in the documentation: code examples in doc comments are compiled and run as tests, so the test suite fails if an example no longer matches the code.
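As a sketch of a documentation test, the example inside the doc comment below is compiled and run by `cargo test` when the function lives in a library crate. The crate name `adc_demo` and the function `adc_to_millivolts` are hypothetical, and the 12-bit range and 3300 mV reference are assumptions for illustration.

```rust
/// Converts a raw 12-bit ADC reading into millivolts, assuming a 3300 mV
/// reference. Readings above 4095 are clamped to full scale.
///
/// ```
/// // `adc_demo` is a hypothetical crate name used for illustration.
/// use adc_demo::adc_to_millivolts;
///
/// assert_eq!(adc_to_millivolts(0), 0);
/// assert_eq!(adc_to_millivolts(2048), 1650);
/// assert_eq!(adc_to_millivolts(4095), 3300);
/// ```
pub fn adc_to_millivolts(raw: u16) -> u32 {
    // Scale 0..=4095 linearly onto 0..=3300 mV with integer arithmetic
    // (the result is rounded down).
    u32::from(raw.min(4095)) * 3300 / 4095
}
```

If someone later changed the scaling, the example in the documentation would fail the build’s test run instead of quietly going stale.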

Rust is ready for embedded

Bringing Rust to the embedded arena relies on LLVM architecture support, which often means it’s not possible for older or more niche microcontrollers. But it’s an ideal candidate for newer, widely supported targets (like those with ARM architecture) provided there is enough library support for the task which the device is intended to perform.

It’s worth noting that in embedded, it’s important to fail early. Rust catches entire classes of errors at compile time, whereas in other languages such issues may not be identified until a prototype is in the field, or even after the product has shipped. The discipline needed for development in Rust has the potential to reduce the cost of testing prototypes and the need for fixes after deployment.

Regardless of the language used for embedded software, what matters is that it’s the right one for the project—and that consideration should always come first.
