Got data? We are looking for R&D collaborators with audio data for our new Embedded AI audio categoriser

A few months ago, Bluefruit Software made the decision to invest in an internal Artificial Intelligence R&D project, exploring, training and developing our skills around neural networks and deep learning. We’ve placed a small but highly skilled team on the project with the goal of using a trained algorithm to autonomously and accurately categorise audio data, allowing for real-time monitoring and diagnostics.

It’s important to say that this is not about Bluefruit Software trying to become an AI company. We have no aspirations to open a BlueMind division at this point in time. Our goal is to be able to show our ability to apply deep learning AI technology to embedded devices for real-world applications. This isn’t a whole new venture for us. We’ve previously delivered AI-related projects for clients, including working on machine vision on medical devices and adding AI monitoring to an embedded system for remote diagnostics.

However, we are keen to do a lot more in this space and want to help our clients see how AI on an embedded device could be used to improve existing products or enable new functions. Critical to this is the need to create something that can run on a standalone device and work within the constraints of an embedded environment, including low power, limited memory, limited processing power and connectivity challenges. Not ones to shy away from a challenge, we are also attempting to make this project suitable for compliance-critical spaces, with possible applications in medical, life sciences, or even aerospace. The goal is to have a working prototype within six months that uses real-world audio data rather than AI training sound libraries.

Where we’ve got to so far: A delve into audio-based AI diagnosis

We’ve dubbed the project ACE (Audio Classification Equipment). As a Lean-Agile company, we’ve broken the work down into phases and then further into two-week sprints. The goal of Phase One was to create a proof of concept using freely available open source audio libraries that are pre-categorised and intended for training neural nets. At the end of sprint four, we have a first Minimum Viable Product (MVP) of our proof of concept. While we are still working on additional functions and features, we thought it would be a good time to show our progress.

Cream teas to dog barks

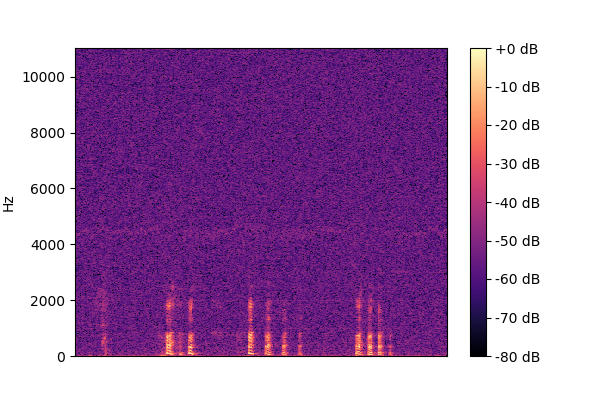

After an intensive period of training and development for our team, we started with a playful image-based algorithm that categorised cream teas into the right way (scone, then jam, then cream) or the wrong way (scone, cream, then jam on top). Once we were sure our scones were safe, we moved quickly on to our audio-based work, training a neural net to accurately classify audio files converted to spectrograms (essentially, images of sound). Dog barks, belly laughs, clapping: we have got you covered.
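To make "images of sound" concrete, here is a minimal sketch of how a clip can be turned into a spectrogram: slice the audio into short overlapping frames, then measure how much of each frequency is present in every frame. It is an illustration only, not the ACE pipeline. The function name, the frame size, the hop length and the synthetic 1 kHz tone are all assumptions made for this example, and a real project would normally use an FFT from a library such as NumPy rather than the slow hand-written transform below.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return a list of frames, each a list of magnitudes per frequency bin."""
    # A Hann window tapers each frame to reduce spectral leakage at its edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    bins = frame_size // 2 + 1  # non-negative frequencies only (real input)
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        # Naive discrete Fourier transform: fine for a sketch, too slow for real audio.
        magnitudes = [
            abs(sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                    for n in range(frame_size)))
            for k in range(bins)
        ]
        frames.append(magnitudes)
    return frames


# Hypothetical test signal: a 1 kHz sine wave sampled at 8 kHz.
SAMPLE_RATE = 8000
TONE_HZ = 1000
FRAME_SIZE = 64
tone = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(512)]

spec = spectrogram(tone, frame_size=FRAME_SIZE)

# The strongest bin in the first frame should sit at the tone's frequency.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
print(f"{len(spec)} frames, {len(spec[0])} bins, peak near {peak_hz:.0f} Hz")
```

Each row of `spec` is one column of the eventual image. Stacking the rows side by side and mapping magnitude to brightness gives the picture an image classifier can be trained on.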

Although we’ve previously integrated AI into an embedded device, this R&D work was our team’s first time training a neural net ourselves, building up all the layers required to continuously improve the accuracy of the audio categorisation. Once we were sure the AI could tell the difference between a clap and a firework, the team ported it onto a standalone embedded device. Here’s our current demo using a Raspberry Pi 4:

Note: While we may build our own applications in the future, our team are currently using open source software for training ACE. In this demo we are using a combination of TensorFlow and fast.ai.

Training, training, training

Accuracy has always been a key driver for us on this project. We know how important accuracy is within safety-critical sectors, especially when it comes to diagnostics and monitoring. In the early stages of the project with the “ESC-50: Dataset for Environmental Sound Classification”, we got to 88% accuracy on our test dataset. This was a great achievement given the previous top record on the repository was 85%. However, we wanted to do better, so we continued to evolve our work. Within a few weeks we specialised this dataset and model to a subset of sounds, boosting our accuracy to 94%!
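For readers new to the metric, accuracy here means the fraction of test clips whose predicted category matches the labelled one. The sketch below shows that calculation, plus the effect of scoring only a chosen subset of categories. Everything in it is hypothetical: the function names, the labels and the toy results are invented for illustration and are not ACE's figures. It also only filters which results are scored, whereas the work described above specialised both the dataset and the model.

```python
def accuracy(results):
    """Fraction of (actual, predicted) pairs where the prediction is correct."""
    if not results:
        raise ValueError("need at least one result to compute accuracy")
    correct = sum(1 for actual, predicted in results if actual == predicted)
    return correct / len(results)


def restrict_to(results, wanted_labels):
    """Keep only results whose actual label is in the chosen subset."""
    return [(actual, predicted) for actual, predicted in results
            if actual in wanted_labels]


# Toy (actual, predicted) pairs, invented for this example.
results = [
    ("dog", "dog"),
    ("clapping", "clapping"),
    ("laughing", "dog"),
    ("dog", "dog"),
    ("rain", "wind"),
]

overall = accuracy(results)
subset = accuracy(restrict_to(results, {"dog", "clapping", "laughing"}))
print(f"overall: {overall:.0%}, subset: {subset:.0%}")
```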

We need to get real

While our accuracy figures are extremely strong, we’ve hit a roadblock. We said we wanted to train this AI so that it could be used for real-world applications in areas such as medical, industrial or aerospace.

Pre-recorded, clean audio clips of dogs barking, prepared for AI applications, don’t help us prove real-world viability. We want raw, real-world sounds to give our neural net (and its training team) a real challenge.

So far we’ve not had much luck tracking down datasets with real-world applications that we can train with. While we are still exploring different options, we would much prefer to work with a collaborative partner on this. Ideally, this would be someone who is already collecting large datasets for research purposes and who would love some AI to help categorise it all for them.

We are not planning on launching a product or taking this work to market in a commercial way. The goal is pure R&D and to build up our knowledge in this space. So, we would be happy to work with research groups, universities, public sector organisations, non-profits, or product owners—basically anyone who might be interested in applying what we are doing to their work.

Do you have or know of any existing audio datasets that we could use to take ACE to the next level?

We are not very picky about what data we get. The direction of the next stage of the project will depend heavily on what data we are able to work with and the needs of anyone we collaborate with. But we do appreciate that an example will give a better idea of the type of thing we’d like to work on. So, for example, if we were to work with a life sciences, pharmaceutical, healthcare or medical company, we would be interested in datasets of human-originating noises with categorised subtypes that could help us differentiate between normal and abnormal sounds. Sounds such as:

- Heartbeats

- Snores

- Coughing

- Breathing

- Throat clearing

- Stomach gurgles

In an ideal world, these datasets would be:

- Numerous (ideally several thousand or tens of thousands per subtype)

- Accurately categorised, so say if there are normal heartbeats in some clips and abnormal heartbeats in others, they’re categorised as normal heartbeats and abnormal heartbeats (this can be as simple as being put in a labelled folder per category)

- Clean audio, so not mixed audio types (not a conversation with coughing going on) or too much background noise
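The "labelled folder per category" layout mentioned above is easy to consume programmatically. The following is a minimal sketch of reading such a dataset, taking each clip's label from the name of its parent folder and counting clips per category. The function name, the folder names and the `.wav` extension are assumptions for illustration; the demo builds a throwaway directory of empty files rather than using real recordings.

```python
import tempfile
from collections import Counter
from pathlib import Path


def load_labelled_clips(root, extensions=(".wav",)):
    """Return (path, label) pairs, labelling each clip by its parent folder name."""
    pairs = []
    for folder in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        for clip in sorted(folder.iterdir()):
            # Skip anything that is not an audio file, such as notes or metadata.
            if clip.is_file() and clip.suffix.lower() in extensions:
                pairs.append((clip, folder.name))
    return pairs


# Demo with a temporary, hypothetical dataset: one folder per category.
with tempfile.TemporaryDirectory() as tmp:
    for label, count in (("normal_heartbeat", 3), ("abnormal_heartbeat", 2)):
        folder = Path(tmp) / label
        folder.mkdir()
        for i in range(count):
            (folder / f"clip_{i}.wav").touch()
    (Path(tmp) / "normal_heartbeat" / "notes.txt").touch()  # ignored by the loader

    clips = load_labelled_clips(tmp)
    counts = Counter(label for _, label in clips)

for label, count in sorted(counts.items()):
    print(f"{label}: {count} clips")
```

A per-category count like this is also a quick way to check whether a dataset is large and balanced enough before any training starts.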

As we are trying to work in the world of compliance, these datasets must:

- Be GDPR safe

- Have personally identifiable information redacted

- Have a license that allows commercial use

Human health sounds are just one route of course. We would be happy to work with audio data in any sector that has a real-world requirement for fast, autonomous, and remote monitoring and diagnostics.

Our dream dataset would be one we can do something worthwhile with, solving a real-world problem. It would be amazing if we could work with a partner to make something that makes the world a better place, not just demonstrate that we can do AI.

If you can help us out, please get in touch with us using the form below.

Got data?

If you are interested in collaborating with us on this project or know where we might be able to find the data we are looking for, please send across your details. Or if you prefer you can email [email protected]. Thank you.

Learn more about embedded software development

Looking for an AI ready embedded team? You’ve found them

Bluefruit Software has been delivering high-quality embedded software solutions, testing and consulting for over 20 years. We’ve worked across a range of sectors including medtech, industrial, agriculture and scientific. If you’re looking to bring AI to your product, we can help.
