What could edge AI do for your product? Key factors and considerations for embedded software and hardware development

What is edge AI and what are the considerations for bringing it to your product? We look at the potential of edge AI in embedded systems and the key design considerations it raises for embedded development.

What do we mean by edge AI?

Through an internal R&D project funded by Aerospace Cornwall, in which we’ve been developing an embedded software application that uses edge AI, we’ve come to understand edge AI as:

AI that runs on an embedded device—and isn’t necessarily connected to the internet or a more comprehensive network.

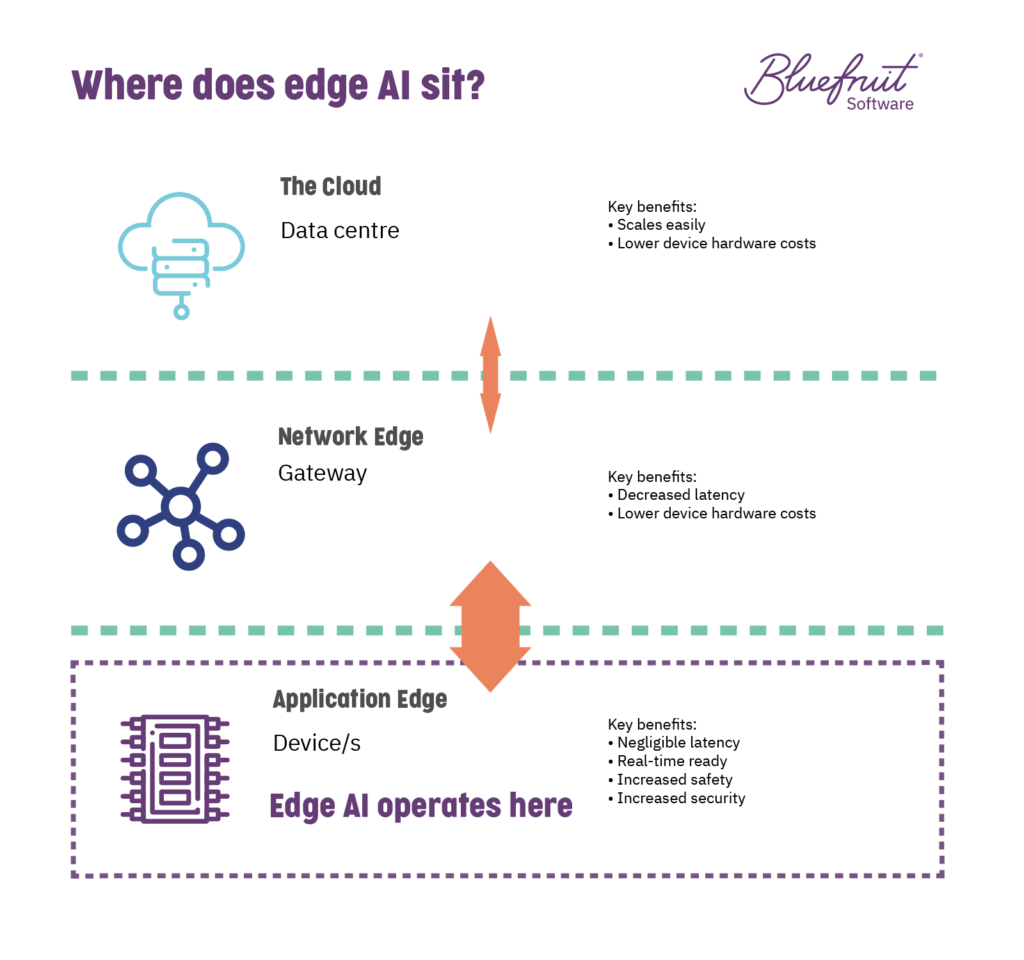

So, not Alexa. We see an Alexa device as an embedded device that captures data and sends it up to the cloud for further processing: essentially a “dumb” product, with the device (currently) on the network edge and its AI based in the cloud.

But our Audio Classification Equipment (ACE) R&D project that takes a sound sample and uses an AI model on the device itself to identify the source of the sound? That’s using edge AI.

Or an autonomous vehicle that uses AI on its systems to help it drive and react to changes in its environment without being reliant on sending that data to the cloud for processing? That we would also see as edge AI.

Or machines or robots on a production line that use AI-enabled self-monitoring, with the computation happening on the device rather than vast amounts of data being sent to a data centre? Also, edge AI.

Edge AI is about AI-enabled devices and systems making the most of the information available to them to make intelligent decisions or provide critical feedback to human operators, all without sending the computation elsewhere.
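
To make “on the device” concrete, here is a deliberately tiny sketch of the pattern: extract features from a sound sample and pick the closest known class, with no network call anywhere. It is not how ACE works (ACE uses a convolutional neural network); the two features, the class names and the centroid values are hypothetical stand-ins for a trained model.

```python
import math

# Hypothetical "trained model": one feature centroid per sound class.
# Features are (RMS energy, zero-crossing rate) for audio scaled to [-1, 1].
CENTROIDS = {
    "engine_hum": (0.5, 0.02),
    "alarm_beep": (0.3, 0.25),
    "background": (0.02, 0.10),
}


def extract_features(samples):
    """Return (RMS energy, zero-crossing rate) for a list of float samples."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    # Count sign changes between consecutive samples.
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0))
    return rms, crossings / (len(samples) - 1)


def classify(samples):
    """Nearest-centroid classification; everything runs locally."""
    features = extract_features(samples)
    return min(CENTROIDS, key=lambda label: math.dist(features, CENTROIDS[label]))


# Usage: one second of an 80 Hz tone sampled at 8 kHz.
RATE = 8000
tone = [0.7 * math.sin(2 * math.pi * 80 * t / RATE) for t in range(RATE)]
print(classify(tone))  # prints engine_hum
```

A real model replaces the centroid table, but the shape of the loop stays the same: sample in, decision out, nothing sent off the device.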

The growth of edge AI

The potential of edge AI—artificial intelligence-driven computing on embedded systems—is immense.

And the market for it is enormous. How huge? Deloitte predicted that during 2020:

More than 750 million edge AI chips—chips or parts of chips that perform or accelerate machine learning tasks on-device, rather than in a remote data [centre]—will be sold.

But beyond the numbers, edge AI opens up many exciting possibilities. Applications exist across a range of sectors because edge AI promises to take AI computing tasks back from the cloud (from data centres) and run them on the device.

Why even consider edge AI over AI in the cloud?

Factors driving AI towards the edge include:

- High roundtrip latency

- Limited bandwidth

- Limited connectivity

- Data centre cost

- Safety

- Data privacy and security

Bringing compute back to local hardware, rather than sending data to data centres, makes a host of software and hardware challenges easier to manage, including:

- Latency: delays are limited to the device’s own hardware and software, rather than including the time data spends travelling to and from the cloud.

- Connectivity and bandwidth: devices don’t need to worry about having access to the internet or enough bandwidth to transmit data speedily.

- Safety: with latency and connectivity minimised as issues, AI outputs become suited to real-time environments. That’s vital where there’s only one opportunity to get it right, because it’s the real world and you’re at the mercy of physics.

- Security and privacy: with fewer connections, a device offers fewer opportunities for data interception, hacking and spoofing, and data that stays on the device isn’t exposed in transit.
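
The latency and connectivity points can be made concrete with some back-of-the-envelope arithmetic. This sketch compares a cloud round trip (upload time plus network round trip plus server-side inference) with on-device inference alone. Every figure in the usage example is a hypothetical placeholder; you’d measure your own link and hardware.

```python
import math


def cloud_latency_ms(payload_bytes, uplink_bps, round_trip_ms, server_inference_ms, link_up=True):
    """Estimated time to get an answer back from a remote data centre."""
    if not link_up:
        return math.inf  # no connectivity: no answer at all
    upload_ms = payload_bytes * 8 * 1000 / uplink_bps
    return upload_ms + round_trip_ms + server_inference_ms


def edge_latency_ms(device_inference_ms):
    """On-device inference has no network terms."""
    return device_inference_ms


# Hypothetical figures: 1 s of 16 kHz, 16-bit mono audio (32,000 bytes) over a
# 1 Mbit/s uplink with an 80 ms round trip and 10 ms of server inference,
# versus 120 ms of inference on a slower local processor.
cloud = cloud_latency_ms(32_000, 1_000_000, 80, 10)
edge = edge_latency_ms(120)
print(f"cloud: {cloud:.0f} ms, edge: {edge:.0f} ms")  # cloud: 346 ms, edge: 120 ms
```

Even where the two figures look comparable, only the edge figure stays bounded when the link degrades: the round trip varies with network conditions, and with `link_up=False` the cloud path never returns an answer.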

For many applications, being able to manage all of these is essential to having a functional version of edge AI or indeed any AI.

If AI-enabled software were part of large infrastructure systems, such as power and water, it would be essential for that AI compute to happen locally. These systems remain mostly closed off from wider networks for good reason.

In a factory environment, choosing edge AI over AI in the cloud is often essential. If a company had AI-enabled systems everywhere, reporting on a multitude of things, monitoring what could be thousands of ongoing processes and making many decisions every minute, the data involved would far outstrip what’s economically feasible to transmit to data centres.
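
A rough calculation shows the scale involved. This sketch estimates the daily volume of raw sensor data against the volume if each device runs inference locally and only reports a compact result. The sensor count, sample rate and message size are hypothetical, chosen only to illustrate the arithmetic.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def raw_gb_per_day(num_sensors, sample_rate_hz, bytes_per_sample):
    """Raw data volume if every sample is streamed to a data centre."""
    return num_sensors * sample_rate_hz * bytes_per_sample * SECONDS_PER_DAY / 1e9


def summary_gb_per_day(num_sensors, bytes_per_report, reports_per_day):
    """Volume if inference runs locally and only results are sent."""
    return num_sensors * bytes_per_report * reports_per_day / 1e9


# Hypothetical plant: 2,000 vibration sensors sampled at 10 kHz, 2 bytes per sample,
# versus a 64-byte status report from each sensor once a minute.
raw = raw_gb_per_day(2_000, 10_000, 2)
summary = summary_gb_per_day(2_000, 64, 24 * 60)
print(f"raw: {raw:,.0f} GB/day, summaries: {summary:.2f} GB/day")  # raw: 3,456 GB/day, summaries: 0.18 GB/day
```

With these assumed figures, the raw stream is a sustained 40 MB/s (320 Mbit/s), around four orders of magnitude more than the summaries.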

Getting edge AI to work for you: what you need to consider

There is a host of software and hardware factors involved in bringing edge AI to any device. Getting the balance right between these concerns does mean making trade-offs.

Computing resources v cost

The usual challenges of embedded systems and software still apply. But for many product owners, the big one will be computing resources v cost. The more powerful the chips and the more sensors involved, the more resources and inputs are available to an AI model, but the more it will cost to buy the hardware needed to manufacture at scale.
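
One way to see the trade-off is to check whether a model’s weights fit the memory of the part you can afford. This sketch does that arithmetic at different numeric precisions. The parameter count and the 512 KiB budget are hypothetical, and storing weights as 8-bit integers (quantisation) is a common technique used here purely as an illustration; it can cost some accuracy, so it needs validating. Activations, code and buffers need memory too and aren’t counted.

```python
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weights_kib(num_params, dtype):
    """Storage needed for the model's weights alone, in KiB."""
    return num_params * BYTES_PER_WEIGHT[dtype] / 1024


def fits(num_params, dtype, budget_kib):
    """True if the weights fit within the memory budget."""
    return weights_kib(num_params, dtype) <= budget_kib


# Hypothetical: a 250,000-parameter model and 512 KiB set aside for weights.
for dtype in ("float32", "float16", "int8"):
    size = weights_kib(250_000, dtype)
    print(f"{dtype}: {size:.1f} KiB, fits: {fits(250_000, dtype, 512)}")
```

Under these assumptions, the float32 model needs a bigger (and pricier) part, while the reduced-precision versions fit.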

Energy

If the device is low-powered or doesn’t have consistent access to a power supply for charging, running an AI model could present a considerable energy burden. The question is whether that burden is manageable, bearing in mind that the alternatives, such as sending the compute to a data centre or the network edge, can be energy-intensive too, because the device still has to power the connection that transmits the data. And this still needs to be balanced against any latency or connectivity concerns.
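
A simple energy budget helps answer whether the burden is manageable. This sketch estimates battery life from a sleep-power floor plus a fixed energy cost per event, so you can compare running inference locally with powering the link to send the data away. All figures are hypothetical placeholders; real values come from measuring your own hardware, and with different numbers the comparison can easily flip.

```python
def battery_life_hours(capacity_mah, voltage_v, sleep_power_mw, energy_per_event_mj, events_per_hour):
    """Estimated battery life, ignoring battery ageing and temperature effects."""
    battery_mwh = capacity_mah * voltage_v
    # 1 mWh = 3,600 mJ, so convert the hourly event energy from mJ to mWh.
    average_power_mw = sleep_power_mw + energy_per_event_mj * events_per_hour / 3600
    return battery_mwh / average_power_mw


# Hypothetical: 2,000 mAh cell at 3.7 V, 0.5 mW asleep, one event every 10 seconds.
local = battery_life_hours(2000, 3.7, 0.5, energy_per_event_mj=50, events_per_hour=360)
radio = battery_life_hours(2000, 3.7, 0.5, energy_per_event_mj=150, events_per_hour=360)
print(f"local inference: {local:.0f} h, transmit instead: {radio:.0f} h")  # local inference: 1345 h, transmit instead: 477 h
```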

Real-time

If real-time performance is a necessity, that’s a strong reason to opt for edge AI. Reduced latency and fewer connectivity gaps make it safer than relying on a data centre to handle the data processing.

Training data

Unless you’re building a self-learning AI that runs in a large cloud environment, you’re going to need data to train the AI model. For specific industry uses, it might be challenging to gain access to a large volume of data samples (whether that’s sensor data, vision or sound) for training. At the same time, it’s worth considering what system data an AI might have access to. There may already be data in an existing environment, or data being produced by a device, that could be reused as an indicator of state changes and so support modelling and decision making.

It’s also essential to make sure that the code involved in training is as optimised as possible, so that it makes the most of the training samples available. Doing this can improve accuracy and potentially reduce the number of samples you need to train the AI.
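
We’re not prescribing a technique here, but one widely used way to get more out of a small set of samples is data augmentation: generating plausible variations of each training sample. Below is a minimal, dependency-free sketch for audio-like data, assuming samples are lists of floats in [-1, 1]; the shift, gain and noise parameters are hypothetical and would need tuning against validation results.

```python
import random


def augment(samples, rng, max_shift=100, gain_range=(0.8, 1.2), noise_std=0.01):
    """Return one randomly varied copy of `samples` (the original is unchanged)."""
    n = len(samples)
    shift = rng.randint(-max_shift, max_shift)
    shifted = [samples[(i - shift) % n] for i in range(n)]  # circular time shift
    gain = rng.uniform(*gain_range)  # simulate a louder or quieter source
    return [gain * s + rng.gauss(0.0, noise_std) for s in shifted]  # add mild noise


def augmented_dataset(dataset, copies, seed=0):
    """Yield each (samples, label) pair plus `copies` augmented variants of it."""
    rng = random.Random(seed)  # seeded so training runs are reproducible
    for samples, label in dataset:
        yield samples, label
        for _ in range(copies):
            yield augment(samples, rng), label
```

Augment only the training split, never the held-out test data, and keep the variations physically plausible for your sensor; a distortion that couldn’t happen in the field teaches the model the wrong thing.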

Compliance

In the automotive and medical device sectors, for instance, there will be compliance requirements. To achieve compliance with edge AI, it will be vital to ensure that it isn’t a “black box AI” whose inputs and operations are invisible to those who need to audit it.

In the ACE project, for example, we’ve worked hard to develop Verification and Validation practices specifically for convolutional neural networks that align with IEC 62304.
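
The sketch below is not our ACE V&V process and isn’t taken from IEC 62304. It’s a hypothetical illustration of one ingredient that helps keep a model auditable: an automated acceptance test that checks recall for each class on a fixed, held-out test set, so a weak class can’t hide behind a good overall accuracy figure. The 0.9 threshold is an assumption.

```python
from collections import Counter


def per_class_recall(labels, predictions):
    """Fraction of each true class that the model predicted correctly."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must be the same length")
    totals = Counter(labels)
    correct = Counter(t for t, p in zip(labels, predictions) if t == p)
    return {cls: correct[cls] / totals[cls] for cls in totals}


def failing_classes(labels, predictions, min_recall=0.9):
    """Return (class, recall) pairs below the acceptance threshold, sorted by class."""
    recalls = per_class_recall(labels, predictions)
    return sorted((cls, r) for cls, r in recalls.items() if r < min_recall)


# Usage: 90% overall accuracy, but one class is badly under-served.
labels = ["engine"] * 8 + ["alarm"] * 2
predictions = ["engine"] * 8 + ["alarm", "engine"]
print(failing_classes(labels, predictions))  # [('alarm', 0.5)]
```

Because the test set and threshold are fixed and the check is deterministic, its result can be recorded as evidence for each model version.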

Security

Although edge AI is less exposed to data interception, hacking and spoofing, it isn’t immune to them. In safety-critical applications, hardware and software design measures would be needed to minimise these risks.
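
As one example of a software design step against spoofing, a device can refuse sensor or control messages that aren’t authenticated. This sketch uses an HMAC-SHA256 tag plus a message counter to reject tampered and replayed frames, using only Python’s standard `hmac`, `hashlib` and `struct` modules. The frame layout and the shared key are hypothetical, and in a real product the hard part is provisioning and protecting that key (for example in a secure element), which isn’t shown.

```python
import hashlib
import hmac
import struct

TAG_LEN = 32  # HMAC-SHA256 tags are 32 bytes
FRAME_FORMAT = ">If"  # hypothetical layout: big-endian uint32 counter + float32 reading


def pack_frame(key, counter, reading):
    """Sender side: payload followed by its HMAC-SHA256 tag."""
    payload = struct.pack(FRAME_FORMAT, counter, reading)
    return payload + hmac.new(key, payload, hashlib.sha256).digest()


class FrameVerifier:
    """Receiver side: accept only authentic frames with a rising counter."""

    def __init__(self, key):
        self._key = key
        self._last_counter = -1

    def verify(self, frame):
        """Return the reading if the frame is authentic and fresh, else None."""
        payload, tag = frame[:-TAG_LEN], frame[-TAG_LEN:]
        expected = hmac.new(self._key, payload, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):  # constant-time comparison
            return None  # tampered with, or not signed with our key
        counter, reading = struct.unpack(FRAME_FORMAT, payload)
        if counter <= self._last_counter:
            return None  # replayed or out-of-order frame
        self._last_counter = counter
        return reading


key = bytes(32)  # placeholder only; never use a fixed key in practice
verifier = FrameVerifier(key)
frame = pack_frame(key, counter=1, reading=21.5)
print(verifier.verify(frame))  # 21.5
print(verifier.verify(frame))  # None (replayed frame)
```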

You’ll also need to think about security when training the AI model itself. Poisoning attacks on machine learning training data are a genuine risk, so you need to be sure of the integrity of the samples used to train an AI.

(BroutonLab has an exciting piece on machine learning vulnerabilities should you wish to read more about this.)
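
One practical control for sample integrity is to record a cryptographic hash of every training file when the dataset is reviewed and approved, then check the files against that manifest before each training run. This is a minimal sketch using Python’s standard `hashlib` and `pathlib`; the directory layout is hypothetical. It only detects changes made after the manifest was created: it can’t tell you whether a sample was already poisoned when collected, and the manifest itself must be stored somewhere an attacker can’t also modify.

```python
import hashlib
from pathlib import Path


def sha256_file(path, chunk_size=65536):
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(directory):
    """Map each file's path (relative to `directory`) to its SHA-256 digest."""
    root = Path(directory)
    return {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(directory, manifest):
    """Return (modified_or_missing, unexpected) lists of file names."""
    current = build_manifest(directory)
    modified_or_missing = sorted(n for n, h in manifest.items() if current.get(n) != h)
    unexpected = sorted(set(current) - set(manifest))
    return modified_or_missing, unexpected
```

A training script would then refuse to start if either returned list is non-empty.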

Find out if edge AI has potential: train and experiment

Before jumping headfirst into developing edge AI for a product, we believe the best first step is for your team to train and experiment with AI.

For our ACE R&D project, we chose to do a “spike” to investigate edge AI. (In Agile software development, a spike is a timeboxed investigation run by a development team.)

The team did some training and learning with fast.ai and a few other resources, then used what they’d learned to experiment and develop the ACE prototype.

When working this way, it’s worth using a system like a Raspberry Pi 4: it has generous resources while still imposing embedded constraints, which makes it useful for proofs of concept and prototyping.
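
During a spike, it’s worth measuring inference time on the target board itself rather than on a development laptop, and looking at tail latency as well as the typical case, since real-time behaviour depends on the slow runs. Below is a small timing harness using only the Python standard library; `infer` and `fake_infer` are hypothetical placeholders for whatever model call you’re evaluating.

```python
import math
import statistics
import time


def benchmark(infer, sample, runs=50, warmup=5):
    """Time `infer(sample)` on the current machine; return latencies in ms."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):  # let caches and lazy initialisation settle
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000)
    timings.sort()
    p95_index = math.ceil(0.95 * runs) - 1  # nearest-rank 95th percentile
    return {
        "median_ms": statistics.median(timings),
        "p95_ms": timings[p95_index],
        "max_ms": timings[-1],
    }


# Stand-in workload; replace with a call into the inference runtime under test.
def fake_infer(sample):
    return sum(x * x for x in sample)


print(benchmark(fake_infer, [0.1] * 16_000))
```

Comparing `max_ms` against your real-time deadline is a quick first check on whether a given board is viable.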

And if you don’t have an embedded software team available?

You can come to a business like Bluefruit Software, and we can investigate whether edge AI has potential for a new or existing product.

We’ve already carried out feasibility investigations for several clients.

Edge AI has great potential

From industrial manufacturing and Industry 4.0 to driverless cars, engine diagnostics and beyond, edge AI offers a vast number of possibilities in embedded systems.

Finding the balance between what you want to achieve, cost and design considerations is complex, but not impossible.

If you’d like to know more about developing for edge AI, check out our related links below.
